Publications

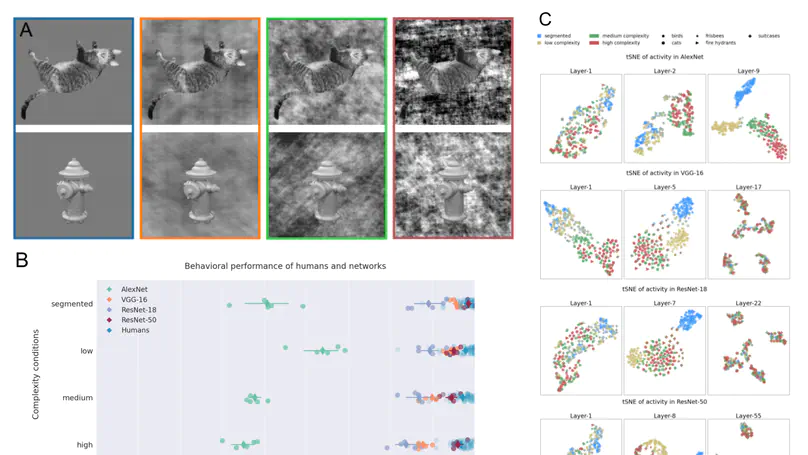

We analyzed human EEG responses and DCNN activations to objects presented against varying backgrounds. Early EEG activity and the initial DCNN layers primarily represented the object's background rather than its category. This indicates that both human visual processing and DCNNs prioritize segregating the object from its background during categorization, and it highlights the crucial role of the background in both human and DCNN object recognition.

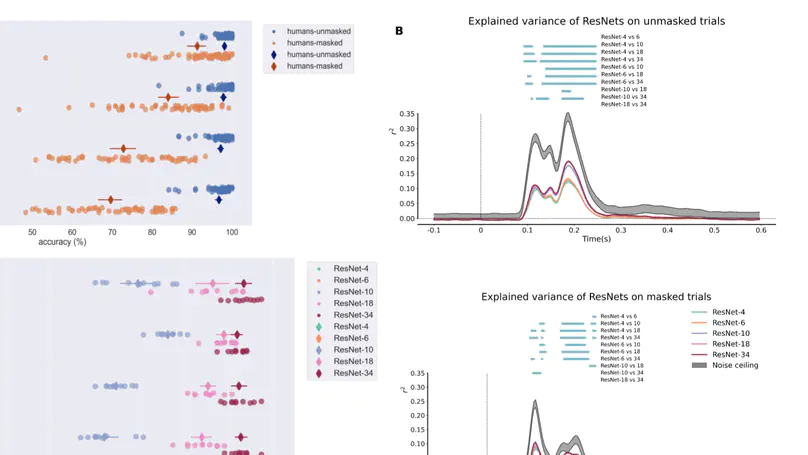

We examined how well deep residual networks (ResNets) simulate human brain processes during visual tasks. Specifically, we asked whether deeper ResNets, which are essentially feedforward networks with an excitatory additive form of recurrence, can capture feedback processing in the human brain. We compared the responses of human participants and ResNets in an object recognition task under both visually masked and unmasked conditions. Deeper ResNets explained more variance in brain activity than shallower networks. This supports the idea that excitatory additive recurrent processing in ResNets captures aspects of feedback processes in the human brain and demonstrates their potential for modeling human visual perception.
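
The description of ResNets as feedforward networks with an additive form of recurrence can be made concrete with a toy example. The sketch below is not the model or analysis used in the study; it is a minimal NumPy illustration, under the simplifying assumption that every residual block shares the same weights, showing that stacking such blocks gives the same result as iterating one additive update over time. The function names, the weight matrix, and the layer sizes are hypothetical. Real ResNets use different weights per block, along with convolutions and normalization, so the correspondence there is only approximate.

```python
import numpy as np


def residual_stack(x, weights):
    """Feedforward pass through residual blocks: h <- h + relu(W @ h)."""
    h = x
    for W in weights:
        # Identity skip connection plus a ReLU branch. The branch output is
        # nonnegative, so each block can only add to the current state.
        h = h + np.maximum(W @ h, 0.0)
    return h


def additive_recurrence(x, W, steps):
    """One layer applied repeatedly over time with the same additive update."""
    h = x
    for _ in range(steps):
        h = h + np.maximum(W @ h, 0.0)
    return h


# Hypothetical toy setup: 4 units, depth 5, one shared weight matrix.
rng = np.random.default_rng(0)
W = rng.normal(scale=0.1, size=(4, 4))
x = rng.normal(size=4)
depth = 5

feedforward_out = residual_stack(x, [W] * depth)
recurrent_out = additive_recurrence(x, W, depth)

# With shared weights, depth in the stack plays the role of time steps.
equivalent = np.allclose(feedforward_out, recurrent_out)
```

In this simplified setting, a deeper stack corresponds to more recurrent iterations, which is one way to read the comparison between deeper and shallower networks described above.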